April 12th, 2019 — Math, Research, Visualization

Mergesort and Quicksort are two well-known sorting algorithms, familiar to both researchers and practitioners. Both are typically presented as examples of divide and conquer. Other than this fact, they are usually not seen to be very closely related. Let’s look at Mergesort and Quicksort in a somewhat unusual way that will make them appear as two sides of the same coin.

Suppose we want to sort a list of items \(a_1, a_2, \dots, a_n\).

In Mergesort, we split the list into two parts: \(L = a_1, \dots, a_{n/2}\), and \(R = a_{n/2+1}, \dots, a_n\). We recursively sort \(L\) and \(R\), then merge the two sorted lists to obtain the final, sorted output.

How do we sort \(L\) and \(R\) recursively? We split them further into equal parts (let’s assume for simplicity that \(n\) is a power of two, so this always works), until parts consist of single elements, which are already trivially sorted.

So, at the bottom of the recursion tree, we have \(n\) singleton-lists, consisting of \(a_1, a_2, \dots, a_n\). Assuming that the splitting has happened “in place”, these items are still stored in their original order, “sorted by their index” \(1,2,\dots,n\).

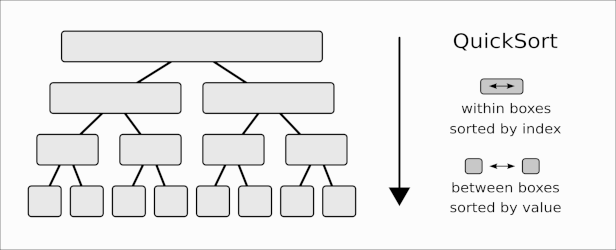

See the following illustration.

The bottom-most line contains the elements in their original order, one in each “box”. As we come out of the recursion, we merge neighboring “boxes” in pairs, putting their elements in the correct order. So within each box, elements are sorted “by value”.

Observe that neighboring boxes in the same line are sorted “by index”, in the sense that their ordering preserves the original order: indices in any box are smaller than indices in the next box to the right. For example, in the second line from the bottom, the first box will contain \((a_1, a_2)\) or \((a_2, a_1)\), the next box will contain \((a_3, a_4)\) or \((a_4, a_3)\), and so on, the point being that 1 and 2 are both smaller than 3 and 4.

This is Mergesort.
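The bottom-to-top picture can be sketched in a few lines of Python. This is only an illustration of the description above, not code from the original post: the function names `merge` and `mergesort_levels` are made up here, and a leftover box is simply carried up when the number of boxes is odd (the post assumes \(n\) is a power of two, so that case does not arise there).

```python
def merge(left, right):
    """Merge two lists that are each sorted by value into one sorted list."""
    out = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            out.append(left[i])
            i += 1
        else:
            out.append(right[j])
            j += 1
    out.extend(left[i:])
    out.extend(right[j:])
    return out


def mergesort_levels(items):
    """Return the lines of the figure, from the bottom line to the top line.

    Each line is a list of boxes. Within a box the items are sorted by value;
    the boxes themselves stay in the order of the original indices.
    """
    level = [[x] for x in items]  # bottom line: n singleton boxes
    levels = [level]
    while len(level) > 1:
        merged = [merge(level[i], level[i + 1])
                  for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:  # odd box out: carry it up unchanged
            merged.append(level[-1])
        level = merged
        levels.append(level)
    return levels


for line in mergesort_levels([5, 2, 4, 7, 1, 3, 2, 6]):
    print(line)
```

The last line printed is the single top box holding the sorted list.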

Can we reverse the arrow of time and go from the top towards the bottom? Well, that would be strange, because at the top we have a single box, so this would mean that we start with a single list that is already sorted, which wouldn’t make sense.

But what if by “sorted” we meant “sorted by index”, meaning that the entries are stored as \((a_1, a_2, \dots, a_n)\)? There is of course no harm in assuming this, as this is indeed how the input is presented. So let’s update the figure.

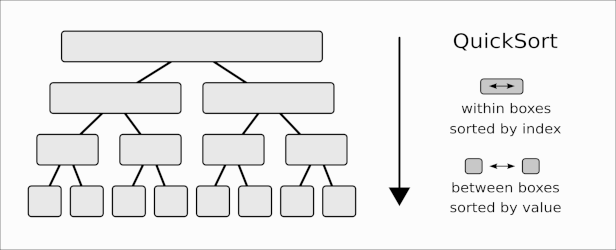

Instead of bottom-to-top, let’s go top-to-bottom. Let’s also swap “by value” and “by index” throughout.

Now we go from a single box, sorted by index (meaning an unsorted input list), to a list of boxes sorted among them by value (meaning a sorted output list at the bottom). So conceptually, this looks very much like a valid sorting algorithm. What is it? Does it have a name?

Yes, it is Quicksort.

Let’s think it through again: we start with a list (sorted “by index”), and we produce from it two lists, sorted between them “by value”. This means that all entries in one are smaller than all entries in the other (this is essentially the partitioning step of Quicksort: we split the elements into those smaller than a pivot, and those greater than or equal to it).

What does it mean that “within a box” the entries are now sorted “by index”? This just means that on both sides of the partitioning we preserve the original ordering of entries. This is not true in every implementation of Quicksort, but it is a technicality we can certainly enforce if we want to. Observe that we assumed that Quicksort splits the list in two equal parts, something we can achieve if we use the median as pivot (in practice of course a random pivot works just fine and is usually faster).
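Here is a minimal sketch of this order-preserving Quicksort, under a few assumptions of mine that are not in the post. The median is found by sorting a copy, which is of course circular and only there to keep the example short. Entries equal to the pivot are put in a third, middle part so that duplicates cannot cause an endless recursion. The name `quicksort` is just a label for this sketch.

```python
def quicksort(items):
    """Quicksort that keeps the original (index) order inside each part."""
    if len(items) <= 1:
        return list(items)
    # Median pivot, found by sorting purely for illustration.
    pivot = sorted(items)[len(items) // 2]
    # List comprehensions scan left to right, so each part stays
    # sorted "by index" while the parts are sorted "by value" between them.
    smaller = [x for x in items if x < pivot]
    equal = [x for x in items if x == pivot]
    larger = [x for x in items if x > pivot]
    return quicksort(smaller) + equal + quicksort(larger)


print(quicksort([5, 2, 4, 7, 1, 3, 2, 6]))
```

With distinct values the middle part holds only the pivot, so the split is as even as the median allows.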

So there you have it. Quicksort and Mergesort are “duals”: you get one from the other by running time backwards, and swapping “by value” and “by index” in the invariants they maintain (or alternatively, swapping “between” and “within”). Yet another way to look at it is that Quicksort does essentially the same thing as Mergesort, but on the inverse permutation as input.

Surprising? Obvious? Is this view of practical use?

What about other sorting algorithms?

For the latter question, observe that we can extend this view to accommodate unequal splits, or in an extreme case, just splitting off (or merging) one element from/to a longer list. In this view, we obtain (essentially) InsertionSort, respectively SelectionSort, which also appear as duals of each other.
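The extreme splits can be written down in the same recursive style. This is a sketch of my own, meant for short lists only, since the recursion depth grows with the list length. `insertion_sort` is Mergesort with one element split off, and `selection_sort` is Quicksort with the minimum as pivot.

```python
def insertion_sort(items):
    """Mergesort with the most unequal split: sort all but the last item,
    then merge the remaining singleton into the sorted list."""
    if len(items) <= 1:
        return list(items)
    rest = insertion_sort(items[:-1])
    last = items[-1]
    pos = len(rest)
    while pos > 0 and rest[pos - 1] > last:
        pos -= 1
    return rest[:pos] + [last] + rest[pos:]


def selection_sort(items):
    """Quicksort with the most unequal split: partition off the minimum,
    then sort the remaining items, which keep their original order."""
    if len(items) <= 1:
        return list(items)
    i = items.index(min(items))
    return [items[i]] + selection_sort(items[:i] + items[i + 1:])


data = [5, 2, 4, 7, 1, 3, 2, 6]
print(insertion_sort(data))
print(selection_sort(data))
```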

This latter duality extends further, and holds even if we do insertion, resp. selection by fancy data structures, such as self-adjusting binary search trees and adaptive tournaments as heaps. These ideas are explored in a different direction in an article here.

There are also other dualities on ordered sets and data structures (the word “duality” itself is quite overloaded). Exploring dualities often suggests new concepts by symmetry or analogy.

As for the discussion of this post, here is an illustration of both sides.

Illustration of the sorting duality.

August 17th, 2016 — Math, Puzzle

Consider the following sequence. Let the first element be \(a_0 = 0\).

Then, for \(a_i\), when \(i>0\), let’s do the following. Consider the last element \(a_{i-1}\). Did this element appear previously in the sequence? If not (meaning that \(a_{i-1}\) is its first occurrence), set \(a_i\) to \(0\). If \(a_{i-1}\) did appear before, then let \(a_k\) be its previous occurrence. Set \(a_i\) to the value \(i-1-k\) (meaning, “how far back did we see this value?”).

So, we have 0,0,1,0,2,0,2,2,1,6,…

For example, the last 6 is there because the previous element 1 has appeared 6 positions earlier.
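The rule translates directly into code. The sketch below (the function name `van_eck` is mine) remembers, for each value, the index of its most recent occurrence before the last element.

```python
def van_eck(n):
    """Return the first n terms a_0, ..., a_{n-1} of Van Eck's sequence."""
    seq = [0]
    last_seen = {}  # value -> index of its latest occurrence before seq[-1]
    while len(seq) < n:
        i = len(seq)  # index of the term we are about to compute
        prev = seq[-1]
        if prev in last_seen:
            seq.append(i - 1 - last_seen[prev])  # how far back we saw prev
        else:
            seq.append(0)  # prev was a first occurrence
        last_seen[prev] = i - 1
    return seq[:n]


print(van_eck(10))  # [0, 0, 1, 0, 2, 0, 2, 2, 1, 6]
```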

This is known as Van Eck’s sequence and appears as A181391 in the wonderful On-Line Encyclopedia of Integer Sequences (OEIS). It was added to the OEIS by Jan Ritsema van Eck in 2010. There are many interesting properties of this sequence (for instance, it was shown that it contains infinitely many zeros), and even more intriguing open questions. For more details see the OEIS entry, or these slides by OEIS founder N.J.A. Sloane.

Here is a variation on the above sequence:

Instead of counting the “number of elements”, let’s count only the “number of distinct elements”. That is, again we start with 0, and then if the last element was new, we write 0, otherwise we write “how many distinct elements” we have seen since its previous appearance.

So the sequence goes: 0,0,1,0,2,0,2,2,1,3,…

For example, the last 3 is there because between the previous element 1 and its last appearance there are 3 distinct elements: 0,1,2.
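In code, the only change from Van Eck’s rule is that we count distinct values in a window instead of measuring its length. The sketch below is deliberately naive: it rebuilds a set for every term, so it can take quadratic time, and `working_set_sequence` is my own name for it. The window runs from just after the previous occurrence up to and including the last element, which matches the example above (0, 1, 2 gives 3).

```python
def working_set_sequence(n):
    """Return the first n terms of the 'distinct elements' variant."""
    seq = [0]
    last_seen = {}  # value -> index of its latest occurrence before seq[-1]
    while len(seq) < n:
        i = len(seq)  # index of the term we are about to compute
        prev = seq[-1]
        if prev in last_seen:
            k = last_seen[prev]
            # distinct values among a_{k+1}, ..., a_{i-1}
            seq.append(len(set(seq[k + 1:i])))
        else:
            seq.append(0)  # prev was a first occurrence
        last_seen[prev] = i - 1
    return seq[:n]


print(working_set_sequence(10))  # [0, 0, 1, 0, 2, 0, 2, 2, 1, 3]
```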

I thought of this sequence independently, but searching for it on OEIS I found that it has been considered before by Nathaniel Shar, who added it to the OEIS as A268755. See here for a plot.

The rule for defining the sequence is similar to the concept of “working set” in the theory of data structures, which refers exactly to the number of distinct queries seen since the previous occurrence of the last query. Therefore, I think a fitting name for the sequence would be “working set sequence”.

So what can we say about the working set sequence (a.k.a. Shar’s sequence)? Does it also contain infinitely many zeros, like Van Eck’s sequence? I generated about half a million elements by computer, and the answer seems to be yes. It could happen, of course, that at some point the sequence reaches a cycle that it can never escape, such as 1,1,1,1,1,… or the less trivial …,1,2,3,3,1,3,2,3,2,2,1,3,3,… (exercise: check that this is really a cycle!)

However, the computer experiment suggests that this does not happen, and eventually every positive integer appears in the sequence.

Can this be proven formally? Yes! Let’s leave this as an exercise (I added a proof sketch to the OEIS entry). As a hint, an easier property to observe is that \(k\) can appear only after \(0, \dots, k-1\) have already appeared.

Theorem. The working set sequence contains infinitely many zeros.

Zeros seem to appear much less frequently in the working set sequence than in Van Eck’s sequence. But how frequent are they? Empirically it seems that there is a gap of roughly a constant times \(k\) between the \(k\)th and \((k+1)\)th zeros, although nothing like this has been proven. Also, the zeros often come in pairs: if, for a long time, there have been no new elements, eventually the new element \(k\) comes, followed by \(0\), and then, since \(0\) has also not appeared for a very long time, there is a high chance that \(k+1,0\) follow right away. This seems to be the case quite frequently. Overall, however, nothing seems to be known about the statistics of this sequence, e.g. the asymptotic growth rate of \(a_n\).

The indices of new elements in the working set sequence (or alternatively, the indices of zeros minus one) have been added to OEIS as A275668.

I mentioned above two possible cycles in the sequence (although they don’t actually appear if we start from 0). What is the set of all possible cycles, does it have a nice characterization? How many different cycles are there with \(k\) distinct elements? These questions are open.

Finally, another open question about the working set sequence (as far as I know, the same question is open for Van Eck’s sequence as well):

Problem. Does every pair of nonnegative integers (a,b) eventually appear in the working set sequence as a pair of consecutive elements? (except for (1,1) of course, which cannot appear).

[NOTE added August 2017] As pointed out by Jan Ritsema van Eck in a comment (see below), the Van Eck sequence also excludes pairs of the type (p,p+1). Prove it as a fun exercise, it is not difficult! So let’s modify the conjecture to also exclude (p,p+1) pairs besides (1,1). The conjecture doesn’t seem extremely plausible to be honest (as Jan points out, there are weaker statements one should prove first), so let’s formulate it as a challenge, to move things forward. Can you find an (a,b) pair not of the form (p,p+1) and not (1,1) that does not appear in the van Eck sequence A181391?

This observation does not apply to the working set sequence. For example, (4,5) appears at positions (21,22). So the similar challenge is: Can you find an (a,b) pair other than (1,1) that does not appear in the working set sequence A268755?

January 3rd, 2016 — Links, Reading

Some of the books I read (and liked) in 2015, and my impressions of them. Previously: 14, 13, 12, 11.

John Maynard Smith, Eörs Szathmáry:

The Origins of Life: From the Birth of Life to the Origin of Language

A very clear explanation of evolution from the chemical basis up to “cultural evolution”. Updated version of older book “Major transitions…” of same authors. Describes evolution at the level of genes, individuals and groups. Often takes an “information-theoretic” view. Doesn’t give the “full picture” of life and evolution but gives a flavour of the forces at work, and the messiness, in some cases arbitrariness of local details, of conflicts and co-operations, etc. Together with work of Dawkins, some of the clearest explanations of evolution.

While at it, I opened Darwin’s original and skimmed about a third of it. The first impression of “On the Origin of Species” is how readable and accessible it still is almost 160 years after its writing. It is organized in a logical way, starting with a wealth of examples, building up evidence until the inevitable conclusion almost presents itself. Especially interesting is the frankness with which Darwin discusses possible objections to his theory. One must agree with the reviewer who writes about the Origin of Species that it “makes me proud to be a human being”.

Ha-Joon Chang: Bad Samaritans: The Myth of Free Trade and the Secret History of Capitalism

Funny and accessible book with critique of globalization and mainstream economic theories. Main thesis could be summed up as: countries that are rich today became rich through policies and strategies that are in many ways the opposite of what they propose as development strategies for poor countries. Many contrarian ideas (e.g. corruption not automatically bad, etc.), most of them well argued. Enjoyed chapter that ridicules “culturalist” views in economics.

Douglas R. Hofstadter, Daniel C. Dennett:

The Mind’s I: Fantasies And Reflections On Self & Soul

Charming collection of essays and short literary works loosely around the topics of the mind, consciousness, identity, etc. The essays are from various sources, including fiction authors like Stanislaw Lem or Jorge Luis Borges, or the authors themselves, who also comment on the essays of others. Favourites: Hofstadter’s Tortoise and Achilles dialogues, “A Conversation With Einstein’s Brain”, Smullyan’s “Is God a Taoist?”, etc.

Bertrand Russell: The Conquest of Happiness

Lighthearted essays — sometimes surprising opinions (e.g. praise of boredom, etc.), tries to understand the sources of happiness and unhappiness. Reaches a similar conclusion as Csikszentmihalyi’s “flow”-theory, his “recipe” summed up as: skillful preoccupation – such as the exercise of a craft + a friendly interest and curiosity towards people and phenomena. Written almost 100 years ago, still timely, notices some changes in society that are even more profound today.

Randall Munroe: What If? Serious Scientific Answers to Absurd Hypothetical Questions

Bought as gift, but ended up reading it myself. Predictably funny and interesting.

Robert Musil: The Man Without Qualities

Masterful, gigantic, complex, fragmentary, sometimes with quirky humor, etc. Have been reading it on and off for the whole year, and will be continuing it in 2016 as well :)

An enthusiastic review (apart from the first 5-6 mins): http://french-italian.stanford.edu/opinions/shows/eo10021.mp3

David Nicolle: Ottomans: Empire of Faith.

Short history of the Ottoman Empire. Especially beautiful maps and illustrations.

R. Feynman: “What Do You Care What Other People Think?”: Further Adventures…

The first part contains anecdotes and letters in the spirit of “Surely you are joking…” and similar books, with some overlap. The second, more interesting part is about the Challenger space shuttle accident investigation in which Feynman was involved. The methodical inquiries and observations are fascinating, as well as the final report.

Sorin Mitu:

Transilvania Mea (English: My Transylvania)

A collection of articles and essays (in Romanian) about various aspects of the history of Transylvania and its inhabitants. Informative and beautifully written.

Elemér Kiss: Mathematical Gems from the Bolyai Chests

This book is the result of an in-depth study of the unpublished manuscripts of the mathematician János Bolyai, and his correspondence with his father, Farkas Bolyai. The book convincingly argues that contrary to popular opinion, Bolyai continued productive mathematical work throughout his life (after his early work on non-Euclidean geometry), and working in isolation, he made independent observations esp. on number theory and the theory of complex numbers, some of which were only discovered later by others. (I read the Hungarian version.)

——

(disclaimer: the following book cover images are “Amazon Affiliate” links. If you click them and buy a book, I will receive a few cents in the form of an Amazon coupon. If you dislike this idea, you can simply remove “mybookbox-20” from the end of the URLs.)

August 13th, 2015 — Math

hardy (adjective):

: able to live through difficult conditions (such as a cold winter or a drought)

: strong and able to accept difficult or unpleasant conditions

(Merriam-Webster)

G.H. Hardy was an eminent English mathematician, also known for his popular and influential book “A Mathematician’s Apology”.

In this extended essay he argues, among other points, that mathematics has a deep intrinsic beauty, and that it is worth pursuing for its own sake.

I tried very hard to like this essay, but failed miserably on every occasion. It would be too easy to mock from our 21st century perspective Hardy’s mistake when he uses number theory as an example branch of mathematics with absolutely no practical applications (which by the way Hardy views as a huge positive). However, I also find the main point about mathematical beauty I mentioned before not very convincingly presented, even if you already agree with him (like I do) before opening the book.

But what I disliked most were Hardy’s strong opinions such as “there is no scorn more profound, or on the whole more justifiable, than that of the men who make for the men who explain. Exposition, criticism, appreciation, is work for second-rate minds”, and in another paragraph “it is a tiny minority who can do something really well, and the number of men who can do two things well is negligible” and finally the notorious “mathematics is a young man’s game”.

To express my dislike of this essay, I am making the modest proposal of a Hardy prize in mathematics, to be awarded to people who achieve significant mathematical results in ways contrary to the letter and the spirit of Hardy’s book (we can never know, of course, whether Hardy himself would consider the results of any significance, although we can guess with good confidence).

More positively, the fictional Hardy prize recognizes:

- pure mathematical work deeply influenced by practice and applications or by teaching and other expository work

- mathematicians (men or women) who started their mathematical career or achieved their most significant result at a relatively late age

- mathematicians who went about their mathematical career in a less than straightforward way, possibly having strong interests or accomplishments outside mathematics, possibly doing mathematics as a hobby

Ignoring historical examples (some of which Hardy must have known), as a small (and quite random) initial sample from our times I would suggest as first recipients: Persi Diaconis, Marjorie Rice, Preda Mihăilescu, Yitang Zhang, Raymond Smullyan.

This post is of course only half-serious (unless someone takes the initiative in such a direction). Would such a prize make sense? This post benefited from discussions with colleagues, who would probably prefer to stay unnamed. I was also informed by this post.

August 12th, 2015 — Math, Research

Recently, together with my colleagues Parinya Chalermsook, Mayank Goswami, Kurt Mehlhorn, and Thatchaphol Saranurak, we published three papers about binary search trees. All three are available on arXiv as preprints. In this post I briefly and informally describe some results and ideas from these papers.

Binary search trees (BST) are perhaps the simplest data structures that are not entirely trivial, and they form the basis of several more advanced data structures and algorithms. BSTs are part of every computer science curriculum, and there is a rich theory describing their properties. Surprisingly, many aspects of BSTs are still not understood, so they are an active field of research.
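For concreteness, here is a minimal sketch of a static BST and of a search that reports how many nodes it visits, which is the search cost discussed in this post. The names `Node` and `search` are mine and do not come from the papers.

```python
class Node:
    """A node of a binary search tree: smaller keys left, larger keys right."""

    def __init__(self, key, left=None, right=None):
        self.key = key
        self.left = left
        self.right = right


def search(root, key):
    """Return (found, visited): whether key is in the tree, and the number
    of nodes on the search path, i.e. the cost of the search."""
    visited = 0
    node = root
    while node is not None:
        visited += 1
        if key == node.key:
            return True, visited
        node = node.left if key < node.key else node.right
    return False, visited


tree = Node(4, Node(2, Node(1), Node(3)), Node(6, Node(5), Node(7)))
print(search(tree, 4))  # the root is found after visiting one node
print(search(tree, 5))  # a leaf costs as much as the depth of the tree
```

A self-adjusting tree would additionally restructure the search path after each call; this sketch leaves the tree unchanged.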

Searching for a single element in a BST is straightforward, and the cost of the search depends on how far the searched element is from the root of the tree. If we search for more than one element, we might want to change the tree after each search, to make future searches more efficient. What is the best way to transform the tree after each search? The splay tree of Sleator and Tarjan from 1983 gives one possible such strategy. Splay trees have many nice properties, for example, they are as good as the best static tree for a given sequence (“static” here means not changed between accesses, and I am sweeping some details under the rug here). Sleator and Tarjan conjectured that even more strongly, splay trees are as good as any “dynamic” tree, i.e. one that uses any sequence of changes in the tree between accesses. This last statement is the famous dynamic optimality conjecture. If true, this would be quite surprising: splay trees just “react” to each access, without knowing what accesses will come next. The conjecture says that knowing the future doesn’t help much. Even the best strategy for changing the tree (tailored to a given sequence) cannot beat splay trees (again, some details omitted here). The conjecture is wide open. For more information about such questions I recommend the survey of Iacono.

Now, on to our recent results.

1. Self-adjusting binary search trees: what makes them tick?

Splay trees are considered quite mysterious: they execute some local transformations that just “happen to work”. We know that splay trees have many nice properties (including the static optimality mentioned before), but the proofs are a bit unintuitive. What is special about splay trees that gives them these properties? Are there other algorithms that have similar properties?

So we look at a general class of algorithms that preserve some properties of splay trees and relax others. Such an algorithm accesses an element in a BST, then transforms the search path into some tree, such that the accessed element becomes the root. We identify some simple conditions of this transformation that are sufficient to guarantee that the algorithm shares some of the nice properties of splay trees. We look at splay trees in a different way, that makes it obvious that they fulfill these conditions. We also show that some other known algorithms and heuristics fulfill these conditions, which explains why they are efficient. We also identify some new heuristics that fulfill the conditions but have not been studied before. Finally we ask, are our conditions necessary for a BST algorithm to be efficient? In a limited sense, we show that they are, but the results in this direction are not conclusive. See the paper for details.

2. Pattern-avoiding access in binary search trees

I mentioned that splay trees are conjectured to be as good as any other BST algorithm, even those that can see into the future, for any possible sequence. However, for most “random” sequences, even the theoretically best BST algorithms are quite inefficient, making the question vacuous in that case. The interesting cases are those sequences that have “some useful structure” that BST algorithms can exploit. So, if we want to show that splay trees (or some other algorithm) are efficient, we need to show that they do well on such “structured” input.

The literature on dynamic optimality describes a number of such “useful structures”. One example is “dynamic finger”: loosely speaking, if successive searches are for values that are close to each other, then search should be efficient. A special case of this is “sequential access”: if we just search the values in increasing order from 1 to n, then search should be really efficient.

In this paper we describe a new kind of structure that has been studied in depth in mathematics, but not in the context of BSTs. We show that search sequences should be executable efficiently by a BST algorithm if they are free of some fixed pattern. See this page for a description of patterns. This generalizes the “sequential access” mentioned before in a different direction, and it also includes other known structures as special cases.
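To illustrate what “free of a pattern” means, here is a brute-force sketch assuming the usual notion of permutation patterns: a sequence contains a pattern if some subsequence has its entries in the same relative order as the pattern. The function `contains_pattern` is a name I made up, it assumes distinct values, and it tries all subsequences, so it is only suitable for tiny examples.

```python
from itertools import combinations


def contains_pattern(sequence, pattern):
    """Return True if some subsequence of `sequence` is order-isomorphic
    to `pattern` (both are assumed to consist of distinct values)."""
    k = len(pattern)
    for idxs in combinations(range(len(sequence)), k):
        sub = [sequence[i] for i in idxs]
        if all((sub[a] < sub[b]) == (pattern[a] < pattern[b])
               for a in range(k) for b in range(a + 1, k)):
            return True
    return False


# Sequential access 1, 2, ..., n never has a larger value before a smaller
# one, so it avoids the pattern (2, 1).
print(contains_pattern([1, 2, 3, 4, 5], [2, 1]))  # False
print(contains_pattern([3, 1, 2], [2, 1]))        # True
```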

We explore this topic in detail in the paper, giving some general results, and some stronger results for special cases.

3. Greedy is an almost optimal deque

Again, studying input with some structure, we look here at a sequence of insert and delete operations into a BST, with the restriction that we can only delete or insert “at the two ends”, that is at values that are the current maximum or minimum of the values in the tree. Such sequences are called deque sequences. It was conjectured a long time ago by Tarjan, that splay trees can execute deque sequences very efficiently (in linear time overall). This is not yet known, but the known results are so close to this, that the difference is only theoretically interesting (which makes it even more interesting :). In this paper (and the previous one) we look at a different algorithm, introduced independently by Lucas and Munro a few years after splay trees, and later extended by Demaine, Harmon, Iacono, Kane, and Pătraşcu. We show that this algorithm (called Greedy BST) is almost linear on deque sequences. This “almost” again hides a small factor that is only of theoretical interest, although a little bit larger than the corresponding factor known for splay trees. The details are in the paper.

December 26th, 2014 — Links, Reading

(disclaimer: the book cover images in this post are “Amazon Affiliate” links. If you click them and buy a book, I will receive a few cents in the form of an Amazon coupon. If you dislike this idea, you can simply remove “mybookbox-20” from the end of the URLs.)

Ha-Joon Chang: 23 Things They Don’t Tell You About Capitalism. A series of observations about the world economy, capitalism, free trade, planning, development, inequality in society, etc. – the chapter titles sound contrarian and provocative, but the opinions are mostly common sense and argued with clarity although not equally convincingly – many of the statements are challenging mainstream economics dogma, but the author does construct a few strawmen too. One of the recurring themes is that economic systems are never of a “pure” kind as described in textbooks, instead, they are both messier and more interesting. Readable, pragmatic, non-ideological. Some of the ideas reminded of NN Taleb’s work.

Michael Nielsen: Reinventing Discovery: The New Era of Networked Science. The book describes several recent (and some less recent) developments and projects that have changed how scientific research is done and to some extent make us think about what scientific research even means. It presents a compelling vision of the future of science and it is a “call to arms” to embrace and popularize these new ideas and techniques, and to invent new tools.

More concretely, the book talks about open access publishing (e.g. arXiv), open source software (e.g. Linux), online collaboration (e-mail, wikis, forums, but more specifically the recent Polymath project), “data-driven intelligence” (predicting influenza, automated translation, Sloan Digital Sky Survey), citizen science (protein folding, amateur astronomy), etc. etc. The book also discusses limitations and obstacles, often due to misaligned incentives in the academic world. The book is excellent, it inspires and motivates — two minor criticisms: selection is somewhat arbitrary – could have included other projects too, and the overall narrative that all these fit into a common thread is slightly forced.

David Gale: Tracking The Automatic Ant: And Other Mathematical Explorations. A book that contains puzzles, interesting mathematical ideas and results, short opinions on various aspects of mathematics. The topics are loosely connected, the format is similar to that of Martin Gardner’s or Douglas Hofstadter’s collections. A common theme throughout the book is the exploration (often computer-assisted) of large mathematical structures, such as the solution space of a puzzle, particularly when classical approaches fail. It is one of the best recreational mathematical books I’ve ever read, covering a rich variety of topics, difficult to summarize in a review.

Donald E. Knuth: Digital Typography. A collection of Donald Knuth’s articles and essays on the topic of digital typography, mostly related to the creation of the TeX and Metafont systems, and the transformation of digital typesetting (esp. of math-y text). Wonderful in its attention to detail (there is a chapter on the letter S). I found the linebreaking algorithm and the design of the AMS Euler font in collaboration with Hermann Zapf especially interesting, as well as the whole idea of parametrized fonts. We get glimpses into the intricacies of typography, where nothing is as simple as it seems from the outside.

Masha Gessen: Perfect Rigor: A Genius and the Mathematical Breakthrough of the Century. I read the German translation of this biography of Grigory Perelman. What I liked:

– the fascinating story of Perelman with interesting facts and details I didn’t know before

– interesting facts about the school system and the academic world in the Soviet Union

What I didn’t like:

– fascination with Clay Prize and other external accolades

– the author somewhat unsympathetic to Perelman and to mathematicians in general – taken out of context any personal quirk can be made to look monstrous (I quite agree with this review here).

– occasional “narrative fallacy”, attempt to make various facts fit together smoothly

William Aspray: John von Neumann and the Origins of Modern Computing. A book about the life of John von Neumann and his contributions to several fields, with special focus on computer science and numerical calculus, as well as about his work in science planning and management. Unusually for a biography, the book gets quite a bit into technical details (which I liked), and tries to trace the evolution of von Neumann’s thinking on various topics. The book focuses on his work and not so much on his personal details, when it does “get personal”, it is almost universally positive, leaving any unflattering aspects to other biographers.

Andrew Hodges: Alan Turing: The Enigma. Less worldly-smart and successful than von Neumann, but his work just as important to the foundations of computer science. Also the intellectual interests of these two men are somewhat parallel, going from mathematical logic to the theoretical foundations of computing, to the engineering task of actually building computers, and finally to biological systems.

This biography is incredibly detailed and well documented – it not only discusses technical ideas, but also sets the broader context of the intellectual currents of the day. Describes the life and thoughts of Alan Turing (reconstructed mostly from letters) in extreme detail. The parts about the work at Bletchley Park on breaking the Enigma are very interesting. Also the parts about the Colossus and ACE computers – the technical details and decisions are interesting but in some places the administrative back and forth gets a bit too tedious. In summary, this is a definitive biography that paints a comprehensive picture of Alan Turing.

Martin Aigner, Günter M. Ziegler: Proofs from THE BOOK. A collection of some of the most beautiful mathematical proofs. I was at first suspicious that such a concept would work as a book, and the selection is of course somewhat subjective, but the book is in fact excellent, and seems accessible for the most part from high school level onwards (requiring significant effort of course, but repaying it well). I think this book is one of the best possible gifts to anyone who loves mathematics.

Andreas M. Hinz, Sandi Klavzar, Uros Milutinovic, Ciril Petr: The Tower of Hanoi – Myths and Maths. I reviewed this book for William Gasarch’s column.

Most people remember the Tower of Hanoi puzzle as something quite simple, interesting mainly as a textbook example of recursion, and as an example of a problem where powers of two appear naturally. In reality, as the book demonstrates, the Tower of Hanoi has a very rich mathematical structure, and as soon as we tweak the parameters we surprisingly quickly find ourselves in the realm of open problems. The rest of the review can be read here.

Günter Ziegler: Darf ich Zahlen? (German). A nice, light-hearted book about mathematics and the process of doing it, also containing anecdotes about famous mathematicians. Makes a nice present and good for learning German.

Früher war noch viel mehr Lametta (German). A pleasant collection of short-stories loosely related to Christmas. As the stories come from different sources and styles, the difficulty of their German is very uneven – so it is quite good to test and improve language skills.

Michael Kohlmeier: Sagen des klassischen Altertums (German). The myths and stories of classical Greek antiquity retold in an informal style with insight and wit. Based on a popular radio and TV show of the author. The book is quite amazing, and good for practicing German, although the informal style does not necessarily make it very easy to read.

Franz Kafka: The Metamorphosis. A classic about which nothing can be said that hasn’t been said already. I found it rather disturbing in places. Also, of the classics, maybe among the more easily accessible in German. 4/5

Paul Auster: The Invention of Solitude. A novel developing some autobiographical themes and stories – the first half is in a straightforward memoir format, the second part is a fictitious “book of memories”, written in third person and mixing storytelling with essayistic parts of varying depth. Overall, the main theme of the book is fatherhood and the relationship between father and son. As such, I found it insightful and moving in places, with good stories to tell, a bit sentimental and overwritten in others. 3/5

Charles Bukowski: Women. The thinly veiled autobiographical (anti)hero of the book, Henry (Hank) Chinaski, describes himself as M Sade without the intellectual depth. Maybe the same applies to this book and Bukowski himself. A more generous critic calls him the great de-Disney-fier of our age. Somewhat annoying narcissism, but good storytelling and turns of phrase. 3/5

Kurt Vonnegut: Cat’s Cradle. I found this book both very clever and very entertaining. On the surface a short and funny Dr Strangelove story – describing as an aside possibly the best fictitious religion ever created, and throwing in concepts that haunt you long after finishing the book: karass, duprass, granfalloon, wampeter – with several layers of satire intermixed with insight on human character and society. Like all good magic realism, it walks the fine line between cynicism and significance. 5/5

December 16th, 2014 — Links, Reading

October 22nd, 2014 — Tech, Visualization

Cuckoo hashing is a simple and elegant method for collision-resolution in hash tables. The resulting dictionary data structure is easy to implement, quite efficient in practice and it is also interesting theoretically (there are several interesting open questions about its properties).
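For readers who have not seen the method before, here is a minimal sketch of the common two-table variant in Python. It is not the code behind the demo; the class name `CuckooHashTable`, the `max_kicks` limit, the seeded use of Python's built-in `hash`, and the "double the tables and re-seed when an insertion fails" policy are all assumptions made for illustration. Every key has exactly one candidate slot in each table, so a lookup inspects at most two slots; an insertion places the key in its slot and, if the slot was occupied, pushes the evicted key to its slot in the other table, repeating as needed.

```python
import random


class CuckooHashTable:
    """Illustrative two-table cuckoo hash set (None cannot be stored as a key)."""

    def __init__(self, capacity=8, max_kicks=32):
        self.capacity = capacity      # number of slots per table
        self.max_kicks = max_kicks    # evictions allowed before rebuilding
        self.tables = [[None] * capacity, [None] * capacity]
        self._new_seeds()

    def _new_seeds(self):
        # One random seed per table stands in for two independent hash functions.
        self.seeds = [random.getrandbits(32), random.getrandbits(32)]

    def _slot(self, which, key):
        return hash((self.seeds[which], key)) % self.capacity

    def contains(self, key):
        # A key can only ever live in one of its two candidate slots.
        return any(self.tables[i][self._slot(i, key)] == key for i in (0, 1))

    def insert(self, key):
        if self.contains(key):
            return
        which = 0
        for _ in range(self.max_kicks):
            pos = self._slot(which, key)
            # Place the key and pick up whatever occupied the slot before.
            key, self.tables[which][pos] = self.tables[which][pos], key
            if key is None:
                return                # the slot was free, nothing was evicted
            which = 1 - which         # the evicted key tries the other table
        self._rebuild(key)            # too many evictions: rebuild the tables

    def _rebuild(self, pending):
        items = [k for table in self.tables for k in table if k is not None]
        items.append(pending)
        self.capacity *= 2
        self.tables = [[None] * self.capacity, [None] * self.capacity]
        self._new_seeds()
        for k in items:
            self.insert(k)
```

For example, after `t = CuckooHashTable()` and `t.insert("apple")`, the call `t.contains("apple")` returns `True`. Doubling on failure keeps the sketch short; a more careful implementation might first retry with fresh hash functions at the same size.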

I made a small visualization of how Cuckoo hashing works. The demo uses JavaScript and HTML5 Canvas, so it should work in most browsers. Let me know if you find it useful or if you have any feedback.

The demo is loosely inspired by Jason Davies’ Bloom filter visualization. Here is my old post about Bloom filters.

September 3rd, 2014 — Puzzle

I made a small logic game/puzzle called Recursi. It consists of independent “memory” games with two pairs of cards each. These games are nested recursively: behind each game there is a card of the game at a higher level. If you find two pairs, you get to flip the card at a higher level behind the current game. Sounds like fun? :) Go ahead and try it. Recursi, a fractal memory game.

December 28th, 2013 — Links, Reading

Here are a bunch of articles/essays/comments I read, enjoyed and bookmarked in the past two years – some of it is deep, some shallow, some funny, some sad, some inspiring, some cynical. Let me know if you find something particularly interesting here, or if you particularly dislike some article. Enjoy! (previously)

Biographies/Interviews/Reviews

Mandelbrot, Zhang, Wolfram, Higgs, Erdős, Erdős and Graham, Erdős, Erdős, Frankl, Linus, Alan Kay, Coppola, Bellard, Kolmogorov, Taleb+, Taleb-, Zeilberger

Motivation/Productivity/Philosophy

Derek Sivers: It’s all who you know?

Leo Babauta: Tips to simplify

Philip Guo: Productivity tips

James Altucher’s Anti-tips (funny writing)

Legacy of the 60s

Dokkōdō

Comments/threads: great comeback, too old, burnout, quality

Math/Research/Teaching

The Hardy-Littlewood axioms of collaboration

Luc Devroye’s authorship commandments

How to read mathematics

How to write mathematics

Emancipatory aspects of mathematics

The prime gap story

Bernard Chazelle: the Algorithm

Timothy Gowers: examples first, trivial mathematics

Oded Goldreich: advice, stories, awards

Gödel’s letter to Neumann

Erick Carreira’s “work ethic” letter

A critical reading of Hamming’s “you and your research”

Douglas Comer: CS identity-crisis, measuring research

A common statistical error

Dijkstra: teaching formal methods, course organization

Terence Tao: what is good math, partial progress, rigour

Technology

James Mickens: The Slow Winter – witty and insightful essay on computing speed

What’s wrong with C++ (in summary)

Programming and personality traits

Early job ads: MS, Amazon

A cold look at startup jobs

Solutions to spam

Real artists ship

Pinboard architecture and interview

Zed Shaw programming advice

37signals writing on bootstrapped business

Comments/threads: social networks and TV shows, acquihire, ERP software, niche-vs-universal

Random Opinion/Linguistics/Humor/Music/Misc.

Contronyms: 1 2 3 4

Malthusianisms

Robert Dickau’s tweets – an explosive concentration of self-deprecating humor

Raganwald: the internet made me sad today

G.G. Marquez: The solitude of Latin America

Top ten reasons to play Go

Chris Lightfoot: real pirates and copyright “pirates”

Miles Davis blindfold listening

Bernard Chazelle: My 29 favorite pieces by Bach

The worst argument in the world

Language modularity

Moravec’s paradox – the only lucid explanation I know of why perception is harder than chess

Notch: I love you, dad

If by Whiskey…

Slavoj Zizek: Shostakovich in Casablanca

William Gibson: the Net is a waste of time (1996)

Linds Redding: A lesson in perspective

Charles Bukowski: People empty out

Nassim Taleb: Understanding, or Convexity

Doron Zeilberger’s favorite quotes

Ribbonfarm: Hacking the non-disposable planet